Intelligent Plasma Shape Control in the HL-3 Tokamak: Latest Progress in Integrating Artificial Intelligence and Nuclear Fusion

Manuscript Information

Niannian Wu*, Zongyu Yang*, Rongpeng Li*,+, Ning Wei*, Yihang Chen, Qianyun Dong, Jiyuan Li, Guohui Zheng, Xinwen Gong, Feng Gao, Bo Li, Min Xu, Zhifeng Zhao+, Wulyu Zhong+, “High-Fidelity Data-Driven Dynamics Model for Reinforcement Learning-based Magnetic Control in HL-3 Tokamak,” (Nat.) Commun. Phys., Aug. 2025, accepted.

Qianyun Dong, Zhengwei Chen, Rongpeng Li, Zongyu Yang, Feng Gao, Yihang Chen, Fan Xia, Wulyu Zhong, and Zhifeng Zhao, “Adapted swin transformer-based real-time plasma shape detection and control in HL-3,” Nuclear Fusion, vol. 65, no. 2, p. 026031, Jan. 2025.

In magnetic confinement fusion, precise control of plasma dynamics and shape is essential for stable operation. We present two complementary developments toward real‑time, intelligent control on the HL‑3 tokamak, both of which have been accepted for publication (see Manuscript Information above).

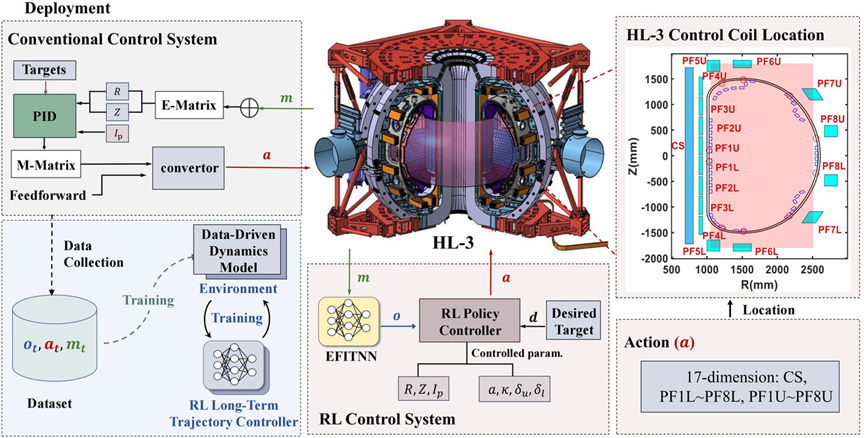

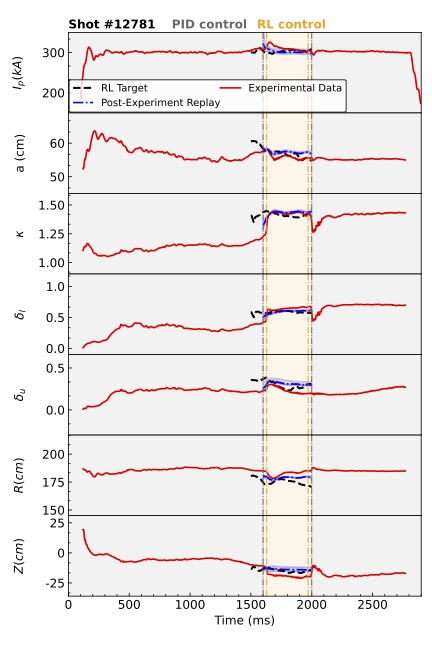

First, we build a high‑fidelity, fully data‑driven dynamics model to accelerate reinforcement learning (RL)–based trajectory control. By mitigating the compounding errors inherent in autoregressive simulation, the model achieves accurate long‑term predictions of the plasma current and the last closed flux surface (LCFS). Coupled with the EFITNN surrogate for magnetic equilibrium reconstruction, it lets the RL agent learn within minutes to issue magnetic coil commands at 1 kHz, sustaining 400 ms of control with engineering‑level waveform tracking. The agent also demonstrates zero‑shot adaptation to new triangularity targets, confirming the robustness of the learned dynamics.
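The compounding-error problem can be illustrated with a toy example. The sketch below is an illustrative assumption, not the authors' model. It uses a hypothetical one-dimensional linear system (`true_step`) and a slightly mis-fitted "learned" model (`learned_step`). Both are rolled out autoregressively for 400 steps (400 ms at 1 kHz), and the gap between them widens with the horizon. The `multi_step_loss` helper shows one common remedy, scoring a model on error accumulated over a whole rollout rather than a single step. Whether the HL-3 model uses this specific technique is not stated in this summary.

```python
"""Toy illustration of compounding error in autoregressive simulation.

The 1-D linear system below is hypothetical; it is NOT the HL-3 plasma model.
"""
from typing import Callable, List

Step = Callable[[float, float], float]  # (state, action) -> next state


def true_step(x: float, u: float) -> float:
    # Hypothetical "real" dynamics.
    return 0.95 * x + 0.10 * u


def learned_step(x: float, u: float) -> float:
    # Hypothetical learned model with a small error in the state coefficient.
    return 0.96 * x + 0.10 * u


def rollout(step: Step, x0: float, actions: List[float]) -> List[float]:
    """Feed each prediction back in as the next input (autoregressive simulation)."""
    xs = [x0]
    for u in actions:
        xs.append(step(xs[-1], u))
    return xs


def multi_step_loss(step: Step, x0: float, actions: List[float], targets: List[float]) -> float:
    """Mean squared error over an entire rollout instead of a single step.

    Training against a loss like this is one common way to penalise error
    accumulation; its use in the HL-3 work is an assumption.
    """
    preds = rollout(step, x0, actions)[1:]
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(targets)


if __name__ == "__main__":
    actions = [1.0] * 400  # 400 control steps = 400 ms at 1 kHz
    truth = rollout(true_step, 0.0, actions)
    model = rollout(learned_step, 0.0, actions)
    for t in (1, 10, 100, 400):
        print(t, abs(model[t] - truth[t]))
```

Because each prediction becomes the next input, a one-step error that looks negligible grows with the horizon. In this toy case, the gap approaches the difference of the two steady states, 0.1/0.04 - 0.1/0.05 = 0.5.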

Figure 1: The deployment of PID and RL control systems on HL-3. The historical interactions between the PID and the HL-3 tokamak produce the dataset for learning the data-driven dynamics model, which serves as an environment for RL training.

Figure 2: Target tracking results of 400-ms RL control in Shot #12781.
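Figure 1 describes the learned dynamics model serving as the RL training environment. A minimal sketch of that idea is shown below. It assumes a simplified, Gym-like `reset`/`step` interface and a reward equal to the negative squared tracking error. Both are illustrative choices, not details from the paper. `LearnedModelEnv` and the toy dynamics are hypothetical, and the EFITNN equilibrium-reconstruction surrogate that the real setup couples in is omitted.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Sequence, Tuple

# Hypothetical learned model signature: (state, coil command) -> next state.
Dynamics = Callable[[List[float], List[float]], List[float]]


@dataclass
class LearnedModelEnv:
    """Hypothetical RL environment that steps a learned model instead of the tokamak."""
    dynamics: Dynamics
    targets: Sequence[List[float]]  # reference waveform, one target per control step
    initial_state: List[float]
    dt: float = 1e-3                # 1 kHz control rate
    state: List[float] = field(default_factory=list)
    t: int = 0

    def reset(self) -> List[float]:
        self.state = list(self.initial_state)
        self.t = 0
        return self.state

    def step(self, action: List[float]) -> Tuple[List[float], float, bool]:
        self.state = self.dynamics(self.state, action)
        target = self.targets[self.t]
        # Assumed reward: negative squared tracking error against the target waveform.
        reward = -sum((s - g) ** 2 for s, g in zip(self.state, target))
        self.t += 1
        done = self.t >= len(self.targets)
        return self.state, reward, done


def toy_dynamics(x: List[float], u: List[float]) -> List[float]:
    # Hypothetical stand-in for the learned dynamics model.
    return [0.9 * xi + ui for xi, ui in zip(x, u)]


if __name__ == "__main__":
    env = LearnedModelEnv(dynamics=toy_dynamics, targets=[[1.0]] * 400, initial_state=[0.0])
    obs, done, steps = env.reset(), False, 0
    while not done:
        obs, reward, done = env.step([0.1])  # constant command in place of a trained policy
        steps += 1
    print(steps, steps * env.dt)  # 400 steps, about 0.4 s of simulated control
```

Because the episode runs on the learned model rather than on the device, a policy can be trained on many such episodes without tokamak time.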

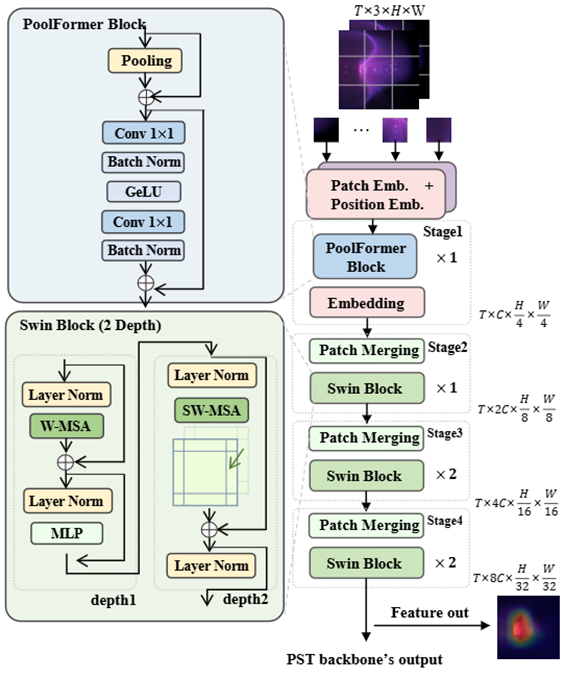

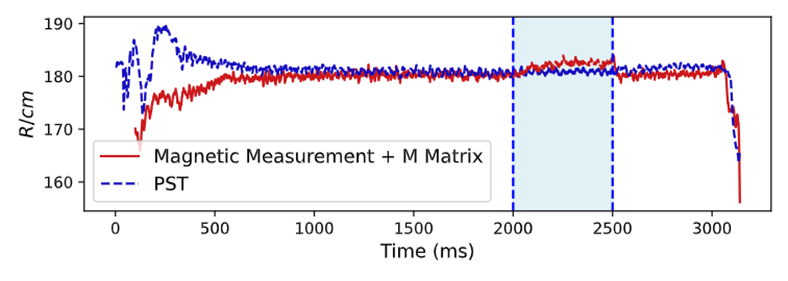

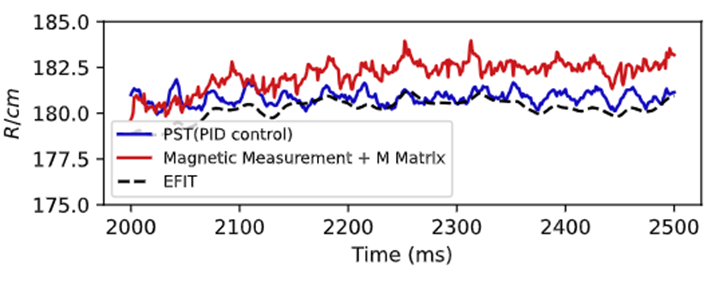

Second, we develop a non‑magnetic, vision‑based method for real‑time plasma shape detection. We adapt the Swin Transformer into a Poolformer Swin Transformer (PST) that interprets CCD camera images to infer six shape parameters under visual interference, without manual labeling. Through multi‑task learning and knowledge distillation, PST estimates the radial and vertical positions (R and Z) with mean absolute errors below 1.1 cm and 1.8 cm, respectively, in under 2 ms per frame, an 80 percent speed gain over the smallest standard Swin model. Deployed via TensorRT, PST enables stable PID feedback control lasting 500 ms, driven by the horizontal displacement computed from the camera images.
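The paragraph above names multi-task learning and knowledge distillation but does not give the training loss. One common formulation for regression is sketched here purely as an assumption. For each task, it blends a loss against reference labels with a loss against a larger teacher network's predictions, and then sums the tasks with per-task weights. The function names and the blend weight `alpha` are illustrative assumptions. So is the idea that the reference labels come from magnetic reconstruction rather than manual annotation. `mae` shows how a per-parameter mean absolute error, such as the reported R and Z figures, would be computed.

```python
from typing import Dict, List, Optional

Series = List[float]


def mae(pred: Series, ref: Series) -> float:
    """Mean absolute error, e.g. in cm for R or Z."""
    return sum(abs(p - r) for p, r in zip(pred, ref)) / len(ref)


def mse(pred: Series, ref: Series) -> float:
    """Mean squared error."""
    return sum((p - r) ** 2 for p, r in zip(pred, ref)) / len(ref)


def multitask_distillation_loss(
    student: Dict[str, Series],
    teacher: Dict[str, Series],
    labels: Dict[str, Series],
    alpha: float = 0.5,
    task_weights: Optional[Dict[str, float]] = None,
) -> float:
    """Hypothetical loss: per shape parameter, blend label loss and teacher-matching loss."""
    weights = task_weights if task_weights is not None else {k: 1.0 for k in student}
    total = 0.0
    for task, pred in student.items():
        # Labels assumed to come from magnetic reconstruction, not manual annotation.
        hard = mse(pred, labels[task])
        # Distillation term: match the larger teacher model's outputs.
        soft = mse(pred, teacher[task])
        total += weights[task] * (alpha * hard + (1.0 - alpha) * soft)
    return total
```

Sharing one loss across tasks lets a single backbone serve all shape parameters. The teacher term transfers accuracy from a slower model to the faster student.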

Figure 3: Illustration of the shared base DNN of PST.

Figure 4: Results of deploying the PST model online and implementing PID feedback control.

Figure 5: A detailed comparison between the control segment of the PST model and other computational methods.
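Figures 4 and 5 concern the PID loop closed on the image-computed horizontal displacement. The sketch below pairs a textbook discrete PID controller with a toy integrator plant that stands in for the tokamak. The gains, the plant, and the 2 ms loop period are hypothetical. The loop period was chosen only to match the reported per-frame inference time. The actual controller settings used on HL-3 are not given in this summary.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Textbook discrete PID controller (gains here are hypothetical)."""
    kp: float
    ki: float
    kd: float
    dt: float  # loop period in seconds
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, setpoint: float, measurement: float) -> float:
        error = setpoint - measurement
        self.integral += error * self.dt
        # Skip the derivative term on the first call to avoid a spurious kick.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_displacement_loop(initial_dr: float, steps: int, pid: PID) -> float:
    """Drive a toy integrator plant (dR/dt = u) toward zero horizontal displacement.

    `measured` stands in for the displacement a vision model would estimate each frame.
    """
    dr = initial_dr
    for _ in range(steps):
        measured = dr
        u = pid.update(0.0, measured)
        dr += u * pid.dt
    return dr


if __name__ == "__main__":
    controller = PID(kp=50.0, ki=0.0, kd=0.0, dt=2e-3)
    # 250 steps of 2 ms = 500 ms, starting from a hypothetical 2 cm (0.02 m) offset.
    print(simulate_displacement_loop(0.02, 250, controller))
```

The demo uses proportional action only, so each step shrinks the offset by a factor of 0.9. On a real plant, the integral and derivative gains would be tuned against the measured response.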

Together, these two streams lay the groundwork for a fully closed‑loop, vision‑informed RL control system. So far, each module has been tested on its own. The next step is to link real‑time shape feedback with the RL‑trained coil actuator policy, enabling continuous, model‑based control with minimal reliance on magnetic probes.